From Firefighting to Forecasting: A Software Engineer's Guide to API Performance Prediction

Master the art of API performance prediction and say goodbye to those 2 AM wake-up calls. Learn how to analyze real-world metrics and build reliable forecasting models.

The Hidden Cost of Reactive API Monitoring

The dreaded 2 AM alert. The checkout API is running slow, again. Orders are piling up, and the operations team is getting anxious. As an on-call engineer, you've been here before - watching dashboards, scrambling to scale services, and wondering if there was a better way.

There is. And it starts with understanding your data. Follow along as we use a Jupyter notebook to make sense of it.

Understanding Our API Performance Data

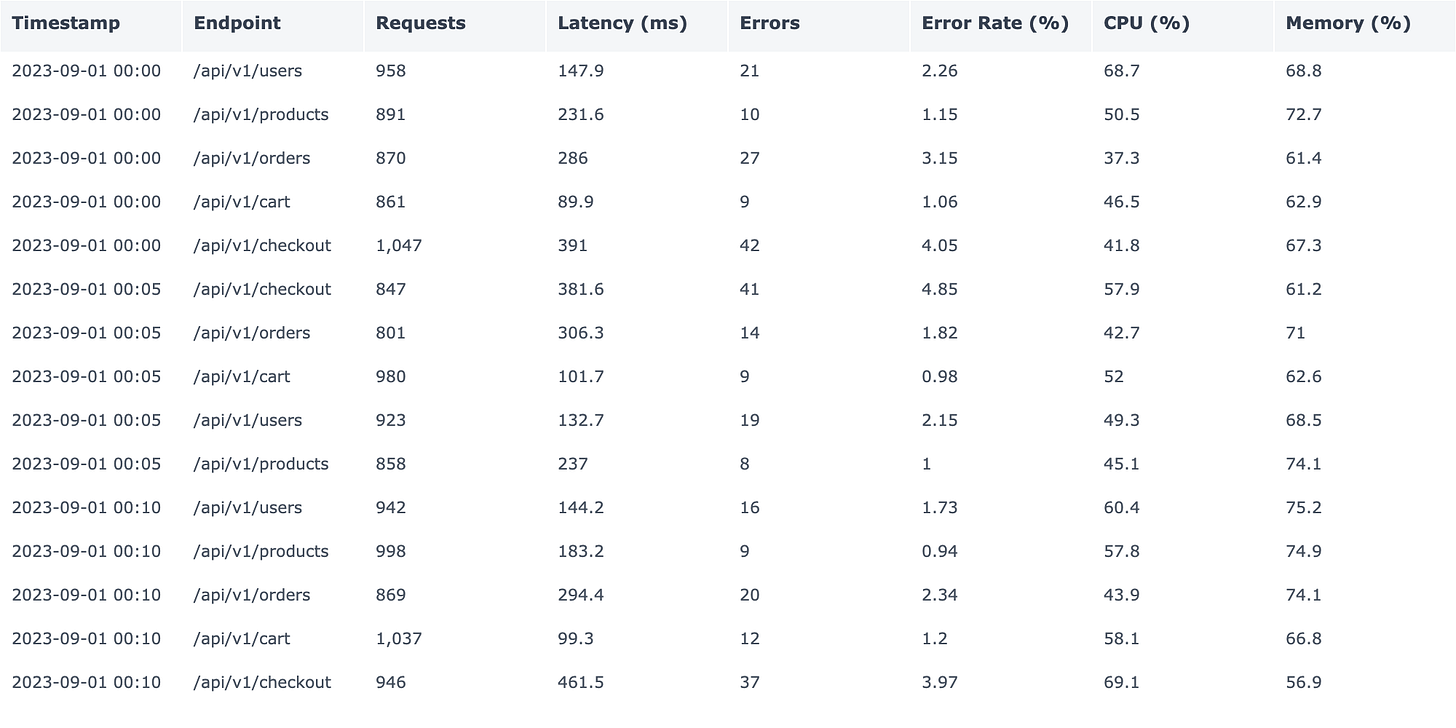

Before diving into predictions, let's examine what API monitoring data actually looks like. Here's a snapshot of 5-minute interval metrics across our e-commerce platform's core services. The data is simulated, covers three months, and exists solely for the purpose of this article!

Assuming you have all libraries installed, run the Data Generation and Initial Analysis section of the notebook.

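    from datetime import datetime

    import pandas as pd

    def create_synthetic_data():
        # Generate timestamps for 3 months at 5-minute intervals
        # ('5min' is the current spelling of the deprecated '5T' alias)
        timestamps = pd.date_range(start=datetime(2023, 9, 1),
                                   end=datetime(2023, 12, 1), freq='5min')

Our data generator carefully models: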

Daily traffic waves using sine functions

Weekly business cycles

Realistic noise with controlled randomness

Metric correlations (like latency following traffic)

Output should look something like this:

Congrats, you have just generated synthetic data for exploratory analysis.

Let's break down what we're looking at:

Requests: The number of API calls in a 5-minute window

Latency: Response time in milliseconds (ms)

Errors: Count of failed requests

Error Rate: Percentage of requests that failed

Resource Usage: CPU and memory utilization percentages
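If you don't have the notebook handy, here's a rough sketch of how a generator with those ingredients (a sine-based daily wave, a weekly cycle, controlled noise, and latency that follows traffic) could produce these columns. It is not the notebook's actual code: the function name, every constant, and every column name other than `requests` and `latency_ms` are assumptions made for illustration.

```python
import numpy as np
import pandas as pd


def sketch_synthetic_metrics(start="2023-09-01", end="2023-12-01", seed=42):
    """Illustrative stand-in for the notebook's generator; all constants are assumptions."""
    rng = np.random.default_rng(seed)
    ts = pd.date_range(start=start, end=end, freq="5min")
    n = len(ts)
    hour = np.asarray(ts.hour) + np.asarray(ts.minute) / 60.0
    is_weekend = np.asarray(ts.dayofweek) >= 5

    daily = 1.0 + 0.5 * np.sin(2 * np.pi * (hour - 6.0) / 24.0)  # daily wave
    weekly = np.where(is_weekend, 0.8, 1.0)                      # weekly cycle
    noise = rng.normal(1.0, 0.1, size=n)                         # controlled noise
    requests = np.clip(1000 * daily * weekly * noise, 1, None).round().astype(int)

    # Latency follows traffic, plus noise of its own
    latency_ms = 95 + 0.13 * requests + rng.normal(0, 30, size=n)
    errors = rng.binomial(requests, 0.01)
    return pd.DataFrame({
        "timestamp": ts,
        "requests": requests,
        "latency_ms": latency_ms,
        "errors": errors,
        "error_rate": 100.0 * errors / requests,
        "cpu_util": np.clip(20 + 0.03 * requests + rng.normal(0, 5, size=n), 0, 100),
        "memory_util": np.clip(rng.normal(60, 5, size=n), 0, 100),
    })


df = sketch_synthetic_metrics()
print(df.head())
```

Fixing the seed keeps the "controlled randomness" reproducible, so two runs of the sketch yield the same frame.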

In the next section, we'll dive deeper into these metrics, visualizing patterns that will become the foundation of our prediction model. We'll see how daily and weekly cycles affect our API performance, and how different metrics correlate with each other.

Stay tuned as we transform this raw data into actionable insights that will help you sleep better at night.

API Traffic Patterns and Analysis

Looking at raw numbers can be overwhelming. Let's visualize three critical patterns that emerged from our analysis of three months of API traffic data.

Run the Visualizing Traffic Patterns section of the notebook.

    def create_daily_pattern(df):
        # Group by hour and calculate means
        hourly_stats = df.groupby('hour').agg({
            'requests': 'mean',
            'latency_ms': 'mean'
        })

This aggregation shows us the true shape of our daily traffic pattern by:

Averaging out noise and anomalies

Preserving the relationship between metrics

Highlighting the key patterns we care about

It should produce three images.

Daily Traffic Analysis

Our 24-hour traffic pattern reveals a fascinating story. Traffic starts climbing around 5 AM, reaching its peak by mid-morning and maintaining high levels until early afternoon. But here's what's particularly interesting: latency doesn't just mirror traffic - it shows a slight lag. This lag represents our systems scaling up to meet demand, and it's a crucial window for predictive action.

Key insights from the daily pattern:

Peak traffic occurs between 5-7 hours into the day

Latency follows traffic with a 15-30 minute delay

The quietest period (around hour 20) shows both minimal traffic and lowest latency
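One way to check a lag like this yourself is to shift the latency series against the request series and see which shift correlates best. The sketch below is an assumption about how you might do it, not the notebook's method; `estimate_lag_steps` is a hypothetical helper, and the toy data is built with a deliberate 4-row (20-minute) delay so the result can be sanity-checked.

```python
import numpy as np
import pandas as pd


def estimate_lag_steps(df, max_lag_steps=12):
    """Return (lag, correlation) for the row shift where requests best match later latency."""
    best_lag, best_corr = 0, -np.inf
    for lag in range(max_lag_steps + 1):
        # Pair requests at row t with latency at row t + lag
        corr = df["requests"].corr(df["latency_ms"].shift(-lag))
        if corr > best_corr:
            best_lag, best_corr = lag, corr
    return best_lag, best_corr


# Toy data: latency echoes the request volume from 4 rows earlier
rng = np.random.default_rng(0)
requests = pd.Series(rng.normal(1000, 200, size=2000))
latency = 95 + 0.13 * requests.shift(4) + rng.normal(0, 5, size=2000)
toy = pd.DataFrame({"requests": requests, "latency_ms": latency})

lag, corr = estimate_lag_steps(toy)
print(f"best lag: {lag} rows = {lag * 5} minutes (correlation {corr:.2f})")
```

With 5-minute rows, a best lag of 3 to 6 rows would correspond to the 15-30 minute window described above.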

Weekly Traffic Distribution

Different endpoints tell different stories. Our weekly pattern analysis shows distinct behaviors across our API endpoints:

Tuesday and Wednesday show the highest consistent traffic

All endpoints follow remarkably similar patterns

Weekend traffic drops by approximately 20%

Sunday shows an interesting uptick, possibly from users preparing for the week ahead

This consistency across endpoints suggests system-wide patterns rather than endpoint-specific issues - a crucial insight for capacity planning.
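A weekly breakdown like this can be sketched with a pivot table. The column names `timestamp` and `endpoint`, both helper functions, and the toy numbers are assumptions for illustration; only `requests` comes from the article's data.

```python
import pandas as pd


def weekly_profile(df):
    """Mean requests per endpoint for each day of the week (0 = Monday)."""
    with_day = df.assign(day_of_week=df["timestamp"].dt.dayofweek)
    return with_day.pivot_table(index="day_of_week", columns="endpoint",
                                values="requests", aggfunc="mean")


def weekend_drop_pct(profile):
    """Percentage drop of the weekend average relative to the weekday average."""
    weekday = profile.loc[profile.index < 5].mean()
    weekend = profile.loc[profile.index >= 5].mean()
    return 100 * (1 - weekend / weekday)


# Toy data: two weeks of daily totals, starting on Monday 2023-09-04
days = pd.date_range("2023-09-04", periods=14, freq="D")
toy = pd.DataFrame({
    "timestamp": days,
    "endpoint": "checkout",
    "requests": [100 if d < 5 else 80 for d in days.dayofweek],
})
profile = weekly_profile(toy)
drop = weekend_drop_pct(profile)
print(drop)
```

If the per-endpoint columns of `profile` move together, that supports the system-wide reading; columns that diverge would point to endpoint-specific behavior instead.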

Metric Correlations

The correlation matrix reveals the intricate relationships between our metrics:

Strong positive correlation between requests and latency (dark blue)

CPU utilization shows moderate correlation with error rates (medium blue)

Memory utilization remains relatively independent (lighter colors)

This heat map is particularly valuable for prediction because it shows us which metrics can serve as early warning indicators. For instance, the strong correlation between requests and latency suggests we can predict latency spikes by monitoring request patterns.
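To go from the heat map to a shortlist of candidate indicators, you can rank every metric by the strength of its correlation with latency. This is a minimal sketch; `rank_correlations` is a hypothetical helper, and the `memory_util` column name and the toy numbers are made up for illustration.

```python
import pandas as pd


def rank_correlations(df, target="latency_ms"):
    """Correlation of every numeric column with the target, strongest first."""
    corr = df.corr(numeric_only=True)
    return corr[target].drop(target).sort_values(key=lambda s: s.abs(),
                                                 ascending=False)


toy = pd.DataFrame({
    "requests": [1, 2, 3, 4, 5],
    "latency_ms": [2, 4, 6, 8, 10],
    "memory_util": [5, 1, 4, 2, 3],
})
ranked = rank_correlations(toy)
print(ranked)
```

Keep in mind that a correlation measured at the same timestamp only says two metrics move together; to serve as an early warning, the indicator also has to move first.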

Implementing Prediction Models

These visualizations reveal more than just pretty patterns - they're the foundation of our prediction strategy. By understanding these rhythms, we can:

Anticipate daily load peaks before they occur

Plan resource allocation around weekly patterns

Use leading indicators to predict potential issues

In the next section, we'll transform these insights into a practical prediction model. We'll show you how to use these patterns to stay ahead of performance issues and finally get a full night's sleep.

Next: Building Your First API Performance Prediction Model

Linear Regression Fundamentals

Before we build our prediction model, let's understand the simplest yet powerful statistical tool we'll use: simple linear regression.

Remember that strong correlation we saw between requests and latency? That's not just an interesting observation - it's the foundation of our prediction strategy. Simple linear regression helps us quantify this relationship with a straightforward equation:

    latency = m * requests + b

Where:

m is the slope (how much latency changes per additional request)

b is the base latency (the theoretical latency with zero requests)

Think of it like this: if you've ever adjusted an autoscaling rule saying "add a new server for every 1000 additional requests", you've actually been doing a form of linear regression in your head! You're assuming a linear relationship between load and required resources.

The beauty of simple linear regression is that it:

Captures basic linear relationships between metrics

Is easy to explain to stakeholders

Provides quick, reasonable predictions

Forms the foundation for more sophisticated models
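To make the equation concrete, here's a small sketch that computes m and b by ordinary least squares: the slope is the covariance of requests and latency divided by the variance of requests, and the intercept makes the line pass through the means. `fit_line` is a hypothetical helper and the four data points are invented so that they sit exactly on a line.

```python
import numpy as np


def fit_line(requests, latency_ms):
    """Ordinary least squares for latency = m * requests + b."""
    x = np.asarray(requests, dtype=float)
    y = np.asarray(latency_ms, dtype=float)
    m = np.sum((x - x.mean()) * (y - y.mean())) / np.sum((x - x.mean()) ** 2)
    b = y.mean() - m * x.mean()
    return m, b


# Made-up points that lie exactly on latency = 0.135 * requests + 95
m, b = fit_line([200, 400, 600, 800], [122, 149, 176, 203])
print(f"m = {m:.3f} ms per request, b = {b:.1f} ms")
```

Real data never lines up this neatly, which is exactly why the fit also needs an accuracy measure.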

Building an API Performance Prediction Model

Now comes the exciting part - turning our insights into actionable predictions. We'll build a simple yet powerful model that predicts API latency based on request volume.

Look at the code in the Building the Prediction Model section of the notebook.

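    from sklearn.linear_model import LinearRegression
    from sklearn.model_selection import train_test_split

    # Prepare data for modeling
    X = df['requests'].values.reshape(-1, 1)
    y = df['latency_ms'].values

    # Split and train (test_size and random_state here are illustrative assumptions)
    X_train, X_test, y_train, y_test = train_test_split(
        X, y, test_size=0.2, random_state=42)
    model = LinearRegression()
    model.fit(X_train, y_train)

Our model is intentionally simple - we're looking for the basic relationship between traffic and latency. The reshaping and splitting steps ensure we: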

Have proper input format for scikit-learn

Keep a test set for validation

Can measure our prediction accuracy
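It helps to know what the two accuracy numbers actually measure. The sketch below computes them by hand with NumPy, using the standard definitions of mean squared error and R-squared; `evaluate` is a hypothetical helper and the three data points are invented.

```python
import numpy as np


def evaluate(y_true, y_pred):
    """Return (mean squared error, R-squared) for a set of predictions."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    residuals = y_true - y_pred
    mse = np.mean(residuals ** 2)
    ss_res = np.sum(residuals ** 2)                  # variation the model misses
    ss_tot = np.sum((y_true - y_true.mean()) ** 2)   # total variation in latency
    return mse, 1.0 - ss_res / ss_tot


mse, r2 = evaluate([100, 200, 300], [110, 190, 310])
print(f"MSE: {mse:.1f}, RMSE: {np.sqrt(mse):.1f} ms, R-squared: {r2:.3f}")
```

Taking the square root of the MSE puts the error back into milliseconds. For the MSE of 13788.23 reported in this article, that works out to roughly 117 ms.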

Once you run it, it will print these metrics and also generate the visualization image.

Model Metrics:

Mean Squared Error: 13788.23

R-squared Score: 0.088

Coefficient: 0.135

Intercept: 94.88

Our model reveals some fascinating patterns that aren't immediately obvious from raw data:

There's a clear positive relationship between requests and latency (red line in top graph)

However, there's significant variance in actual latency for any given request load (spread of blue dots)

The model predicts a baseline latency of about 95 ms (the intercept), increasing by roughly 0.135 ms per additional request

Most interestingly, we see a "spread pattern" where variability increases with higher request volumes

Looking at the actual vs. predicted plot (bottom), we can see that our simple model:

Tends to overpredict latency for lighter loads

Underpredicts for heavier loads

Shows considerable scatter around the perfect prediction line (dashed)

This teaches us something crucial about API performance: while there's definitely a relationship between requests and latency, other factors are clearly at play. The spread pattern suggests that as load increases, other variables (perhaps database connections or cache hit rates) become increasingly important.

For practical use, this means:

We can predict general trends in latency based on request volumes

We should be conservative with our predictions, especially at higher loads

We need to consider additional factors for more accurate predictions
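You can put numbers on the "overpredicts at light load, underpredicts at heavy load" observation by averaging the prediction error per load bucket. This is a sketch under assumptions: `residuals_by_load` is a hypothetical helper, the quantile bucketing is one choice among many, and the toy data is invented.

```python
import numpy as np
import pandas as pd


def residuals_by_load(requests, actual_ms, predicted_ms, buckets=4):
    """Mean, spread, and count of (actual - predicted) per request-volume bucket."""
    frame = pd.DataFrame({
        "requests": np.asarray(requests, dtype=float),
        "residual": np.asarray(actual_ms, dtype=float)
                    - np.asarray(predicted_ms, dtype=float),
    })
    frame["load_bucket"] = pd.qcut(frame["requests"], q=buckets,
                                   labels=False, duplicates="drop")
    return frame.groupby("load_bucket")["residual"].agg(["mean", "std", "count"])


# Toy data: predictions run 5 ms high at low load and 5 ms low at high load
toy_requests = [1, 2, 3, 4, 5, 6, 7, 8]
toy_predicted = [100.0] * 8
toy_actual = [95.0] * 4 + [105.0] * 4
summary = residuals_by_load(toy_requests, toy_actual, toy_predicted, buckets=2)
print(summary)
```

A negative mean residual marks a bucket where the model overpredicts, a positive one marks underprediction, and a growing `std` across buckets is the "spread pattern" in numeric form.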

Actionable Metrics: Making Predictions Useful

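The model metrics reveal an important truth about API performance prediction - it's far from perfect (an R-squared score of 0.088 means request volume alone explains only about 9% of the variance in latency), but it can still be useful for anticipating general trends. Here's how to put these predictions into action: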

1. Set Proactive Alerts

Instead of waiting for latency to spike, set alerts based on request volumes:

    def should_alert(current_requests, threshold_ms=300):
        predicted_latency = 0.135 * current_requests + 94.88  # Our model
        return predicted_latency > threshold_ms

2. Plan Scaling Windows

Remember the 15-30 minute lag we discovered between traffic spikes and latency increases? Use this window:

Monitor request volumes at 5-minute intervals

If should_alert() returns true, trigger scaling before latency spikes

Set cool-down periods based on our weekly pattern analysis
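The steps above can be sketched as a small trigger that checks each 5-minute reading and refuses to fire again during a cool-down. The coefficients come from the model metrics in this article; the `ScalingTrigger` class, the `breakeven_requests` helper, and the 30-minute cool-down are assumptions for illustration, and the actual scaling call is left out.

```python
from datetime import datetime, timedelta

COEFFICIENT = 0.135  # slope from the model metrics in this article
INTERCEPT = 94.88    # intercept from the same metrics


def predicted_latency_ms(requests):
    return COEFFICIENT * requests + INTERCEPT


def breakeven_requests(threshold_ms=300):
    """Request volume at which the fitted line crosses the threshold."""
    return (threshold_ms - INTERCEPT) / COEFFICIENT


class ScalingTrigger:
    """Fires at most once per cool-down period (the 30-minute default is an assumption)."""

    def __init__(self, threshold_ms=300, cooldown=timedelta(minutes=30)):
        self.threshold_ms = threshold_ms
        self.cooldown = cooldown
        self.last_fired = None

    def check(self, current_requests, now):
        """Return True when a scale-up should be triggered for this reading."""
        if predicted_latency_ms(current_requests) <= self.threshold_ms:
            return False
        if self.last_fired is not None and now - self.last_fired < self.cooldown:
            return False
        self.last_fired = now
        return True


trigger = ScalingTrigger()
start = datetime(2023, 9, 4, 9, 0)
for minutes, requests in [(0, 1000), (5, 1600), (10, 1700), (40, 1700)]:
    fired = trigger.check(requests, start + timedelta(minutes=minutes))
    print(minutes, requests, round(predicted_latency_ms(requests), 1), fired)
```

With these coefficients and a 300 ms threshold, the fitted line crosses the threshold at roughly 1,519 requests per 5-minute window, so that is the volume at which the trigger starts firing.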

3. Build Safety Margins

Given our model's tendency to underpredict at high loads:

Add 20% buffer to predictions above 1500 requests/minute

Set conservative thresholds for critical endpoints like checkout

Monitor prediction accuracy and adjust buffers accordingly
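Here's a minimal sketch of how such a buffer might be applied and later tuned. Both functions are hypothetical helpers; the sketch assumes the 1500 cutoff is expressed in the same unit as the model's input, and the quantile-based tuning rule is just one possible approach.

```python
import numpy as np


def buffered_prediction_ms(requests, buffer=0.20, high_load=1500,
                           coefficient=0.135, intercept=94.88):
    """Model prediction, inflated by `buffer` once load exceeds `high_load`."""
    predicted = coefficient * requests + intercept
    if requests > high_load:
        predicted *= 1 + buffer
    return predicted


def suggest_buffer(actual_ms, predicted_ms, quantile=0.9):
    """Buffer that would have covered the given share of past high-load readings."""
    ratios = (np.asarray(actual_ms, dtype=float)
              / np.asarray(predicted_ms, dtype=float))
    return max(0.0, float(np.quantile(ratios, quantile)) - 1.0)


print(buffered_prediction_ms(1000))  # below the cutoff: plain prediction
print(buffered_prediction_ms(1600))  # above the cutoff: prediction plus 20%
print(suggest_buffer([100, 110, 120], [100, 100, 100], quantile=1.0))
```

Feeding `suggest_buffer` with recent actual and predicted latencies from high-load periods gives a data-driven starting point for adjusting the 20% figure.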

Common Pitfalls in API Performance Prediction

Our simple linear regression model is a powerful starting point, but let's explore some common pitfalls to avoid as you implement your own prediction system:

1. Single Metric Dependency

While request count and latency are correlated, relying solely on this relationship can be misleading. For instance, a sudden increase in database-heavy requests might cause performance issues even with normal traffic levels. Always monitor the nature of requests, not just their quantity.

2. Missing Caching

Our model doesn't account for cache behavior, which can dramatically affect performance patterns. A cache miss under high load can trigger a cascade of slow responses that the model didn't predict. Consider tracking cache hit rates alongside request metrics to refine predictions.

3. Ignoring System Boundaries

APIs rarely exist in isolation. External dependencies like databases, caches, or third-party services can introduce latency that appears random to our model. Some key considerations:

Database connection pool limits

Third-party API rate limits

Network latency variations

Message queue backpressure

4. Fixed Time Windows

Using fixed time windows (like our 5-minute intervals) can mask micro-spikes in traffic. A service might receive 1000 requests in a minute, but if they all arrive in the same second, we have a very different performance scenario. Consider using smaller sampling windows during peak periods.
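To see the effect, compare two toy minutes that both contain 1000 requests: one spread evenly, one packed into a single second. The helper `peak_per_second` and the generated arrival times are assumptions for illustration; in practice the timestamps would come from your access logs.

```python
import pandas as pd


def peak_per_second(arrivals, window="1min"):
    """For each window: total requests and the busiest single second."""
    per_second = pd.Series(1, index=pd.DatetimeIndex(arrivals)).resample("1s").sum()
    return pd.DataFrame({
        "total": per_second.resample(window).sum(),
        "peak_per_second": per_second.resample(window).max(),
    })


# 1000 requests spread evenly across one minute (one every 60 ms)
steady = peak_per_second(pd.date_range("2023-09-04 09:00:00", periods=1000, freq="60ms"))
# 1000 requests that all land within the same second
burst = peak_per_second(pd.date_range("2023-09-04 09:00:30", periods=1000, freq="1ms"))

print(steady)
print(burst)
```

Both windows report the same total, yet the peak per-second rate differs by well over an order of magnitude, which is exactly the detail a window-level count hides.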

5. Static Thresholds

The assumption that performance thresholds remain constant across all conditions rarely holds true. What works during normal operations might fail during high-traffic periods. Consider implementing dynamic thresholds that adapt to:

Time of day

Day of week

Seasonal patterns

Deployment events
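As a rough sketch of the first two items, a threshold can be derived per day-of-week and hour from historical latency instead of being fixed. The `timestamp` column name, both helper functions, the 95th percentile, and the 1.2 margin are all assumptions for illustration; seasonal patterns and deployment events would need extra inputs not shown here.

```python
import pandas as pd


def dynamic_thresholds(df, quantile=0.95, margin=1.2):
    """Latency limit per (day of week, hour): a high quantile times a margin."""
    keys = [df["timestamp"].dt.dayofweek.rename("day_of_week"),
            df["timestamp"].dt.hour.rename("hour")]
    return df.groupby(keys)["latency_ms"].quantile(quantile) * margin


def is_anomalous(thresholds, timestamp, latency_ms):
    """True when latency exceeds the limit learned for that weekday and hour."""
    key = (timestamp.dayofweek, timestamp.hour)
    if key not in thresholds.index:
        return False  # no history for this slot, so no verdict
    return bool(latency_ms > thresholds.loc[key])


# Toy history: four weeks of hourly readings where latency grows through the day
hours = pd.date_range("2023-09-04", periods=24 * 28, freq="h")
history = pd.DataFrame({"timestamp": hours, "latency_ms": 100.0 + 10.0 * hours.hour})
limits = dynamic_thresholds(history)

print(is_anomalous(limits, pd.Timestamp("2023-10-02 03:15"), 200))  # quiet hour
print(is_anomalous(limits, pd.Timestamp("2023-10-02 10:15"), 200))  # busy hour
```

The same 200 ms reading is judged against a different limit at 3 AM than at 10 AM, which a single static threshold cannot express.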

Understanding these pitfalls helps explain why our model's R-squared score isn't higher and guides us toward more sophisticated prediction approaches. However, even with these limitations, the current model provides valuable insights for proactive performance management.

In the next article, we'll explore how to enhance our model by incorporating these additional factors and handling the increased variability at higher loads.
