Model Context Protocol: AI Development Transformed For Good

The Model Context Protocol (MCP) blurs the line between software engineering and AI even further. Let's take a brief look at what some are calling the "USB-C" for LLMs.

What is Model Context Protocol?

Anthropic open-sourced MCP on November 25, 2024. It aims to replace one-off, custom integrations that supply context to LLMs programmatically with a single open standard, and it is now making waves in developer circles.

As of this writing, the Python SDK repository has about 1.3K GitHub stars and the TypeScript SDK about 1.1K.

The core innovation of MCP lies in its standardization of context management patterns. While LLMs have transformed how we build applications, they've also introduced unique engineering challenges around context windows, token management, and prompt engineering. MCP addresses these by providing a unified interface for context operations, similar to how USB-C standardized device connectivity. This protocol introduces typed context objects, deterministic context operations, and a consistent API surface that works across different LLM implementations.
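Here is what "unified interface" means on the wire. The published MCP specification exchanges JSON-RPC 2.0 messages between clients and servers, and `tools/call` is one of the methods it defines. The sketch below builds such a request by hand. The `get_weather` tool and its arguments are hypothetical, and the two interfaces are a simplification written for this example rather than the SDK's own types.

```typescript
// Minimal JSON-RPC 2.0 request shape (simplified; not the SDK's own types).
interface JsonRpcRequest<P> {
  jsonrpc: "2.0";
  id: number;
  method: string;
  params: P;
}

// Parameters for MCP's `tools/call` method: a tool name plus its arguments.
interface ToolCallParams {
  name: string;
  arguments: Record<string, unknown>;
}

let nextId = 1;

function toolCallRequest(
  name: string,
  args: Record<string, unknown>,
): JsonRpcRequest<ToolCallParams> {
  // Each request gets a fresh id so the client can match it to its response.
  return { jsonrpc: "2.0", id: nextId++, method: "tools/call", params: { name, arguments: args } };
}

// Hypothetical tool exposed by some MCP server.
const request = toolCallRequest("get_weather", { city: "Berlin" });
console.log(JSON.stringify(request));
// {"jsonrpc":"2.0","id":1,"method":"tools/call","params":{"name":"get_weather","arguments":{"city":"Berlin"}}}
```

Because every server speaks the same message format, a client written once can call tools on any compliant server.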

The Protocol's Core Components

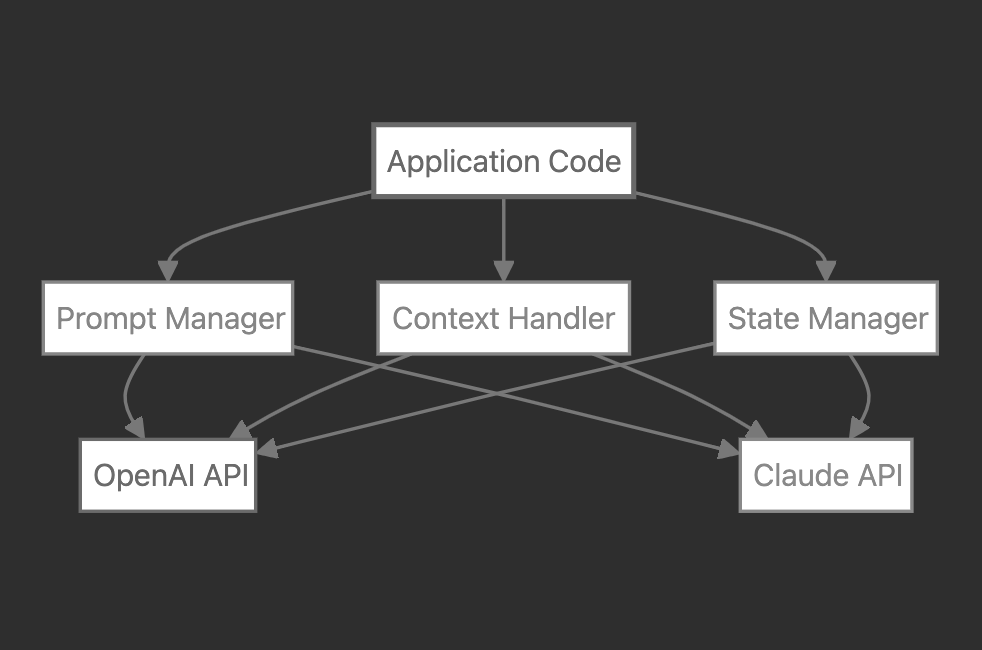

Model Context Protocol introduces a structured approach to context management through three foundational components.

Context Objects serve as the primary data structure, encompassing both the content and metadata of model interactions. These objects maintain strict immutability guarantees, ensuring consistency across operations. Every Context Object carries a type signature defining its structure and constraints, enabling static analysis and validation.
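A minimal sketch of an immutable, typed context object, assuming a plain TypeScript encoding. `ContextObject`, `Message`, and `createContext` are hypothetical names, not MCP SDK types. `Readonly` types catch mutation at compile time, and `Object.freeze` at each level catches it at runtime.

```typescript
type Role = "system" | "user" | "assistant";

interface Message {
  readonly role: Role;
  readonly content: string;
}

// Hypothetical context object: content plus metadata, tagged with a kind.
interface ContextObject {
  readonly kind: "conversation";
  readonly messages: readonly Message[];
  readonly metadata: Readonly<{ model: string; maxTokens: number }>;
}

// Freeze every level. In strict-mode code, assigning to a frozen property
// throws a TypeError, so accidental mutation cannot go unnoticed.
function createContext(
  messages: Message[],
  metadata: { model: string; maxTokens: number },
): ContextObject {
  return Object.freeze({
    kind: "conversation" as const,
    messages: Object.freeze(messages.map((m) => Object.freeze({ ...m }))),
    metadata: Object.freeze({ ...metadata }),
  });
}

// "example-model" is a placeholder model name.
const ctx = createContext(
  [{ role: "system", content: "You are a helpful assistant." }],
  { model: "example-model", maxTokens: 4096 },
);
console.log(Object.isFrozen(ctx.messages[0])); // true
```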

Operation Handlers form the second pillar of MCP, providing deterministic transformations of Context Objects. These handlers implement specific operations like context window manipulation, prompt template application, and state transitions. Each handler maintains pure function semantics, producing new Context Objects rather than mutating existing ones. This design choice enables robust composition of operations while preserving system invariants.
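Pure-function handlers can be sketched as functions from one context to a new one. `Handler`, `appendUserMessage`, `keepLastMessages`, and `compose` are hypothetical helpers chosen for illustration. What matters is that every step returns a fresh object, so steps compose freely and the input is never touched.

```typescript
interface Message {
  readonly role: "system" | "user" | "assistant";
  readonly content: string;
}

interface Context {
  readonly messages: readonly Message[];
}

// A handler is a pure function from one context to a new context.
type Handler = (ctx: Context) => Context;

// Prompt-template style operation: append a user turn.
const appendUserMessage =
  (content: string): Handler =>
  (ctx) => ({ messages: [...ctx.messages, { role: "user", content }] });

// Window-manipulation style operation: keep only the most recent n messages.
const keepLastMessages =
  (n: number): Handler =>
  (ctx) => ({ messages: ctx.messages.slice(Math.max(0, ctx.messages.length - n)) });

// Left-to-right composition; each step sees the previous step's output.
const compose =
  (...handlers: Handler[]): Handler =>
  (ctx) =>
    handlers.reduce((acc, h) => h(acc), ctx);

const original: Context = { messages: [{ role: "system", content: "Be concise." }] };
const pipeline = compose(
  appendUserMessage("Hi"),
  appendUserMessage("Summarize MCP"),
  keepLastMessages(2),
);
const updated = pipeline(original);

console.log(original.messages.length); // 1 (the input is untouched)
console.log(updated.messages.map((m) => m.content).join(" | ")); // Hi | Summarize MCP
```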

State Managers complete the protocol trinity, orchestrating the lifecycle of Context Objects across application boundaries. They maintain consistency guarantees similar to database transaction managers, ensuring atomic operations across context transitions. State Managers also implement the protocol's persistence semantics, providing durability guarantees for long-running conversations and multi-turn interactions.
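The transaction-manager analogy can be sketched as a store that runs a batch of transitions against a working value and commits only if all of them succeed. `StateManager` and `transact` are hypothetical names, the sketch assumes transitions are pure, and durability (writing snapshots to disk or a database) is left out.

```typescript
interface Context {
  readonly turn: number;
  readonly messages: readonly string[];
}

type Transition = (ctx: Context) => Context;

// Hypothetical state manager: a batch of transitions commits together or not at all.
class StateManager {
  private current: Context;

  constructor(initial: Context) {
    this.current = initial;
  }

  get state(): Context {
    return this.current;
  }

  transact(...steps: Transition[]): Context {
    // Assumes transitions are pure: they return new objects and never mutate
    // `working`, so abandoning it on failure leaves no trace.
    let working = this.current;
    for (const step of steps) {
      working = step(working); // a throw here skips the commit below
    }
    this.current = working; // commit is a single reference swap
    return working;
  }
}

const addTurn =
  (text: string): Transition =>
  (ctx) => ({ turn: ctx.turn + 1, messages: [...ctx.messages, text] });

const failing: Transition = () => {
  throw new Error("validation failed");
};

const manager = new StateManager({ turn: 0, messages: [] });
manager.transact(addTurn("hello"));
try {
  manager.transact(addTurn("partial"), failing); // the second step throws
} catch {
  // The failed transaction was never committed.
}
console.log(manager.state.turn, manager.state.messages.join(",")); // 1 hello
```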

Engineering Challenges MCP Solves

Traditional LLM implementations face critical challenges that impact system reliability and developer productivity. Context window management presents a fundamental challenge, where developers must manually track token counts, implement truncation strategies, and handle context overflow scenarios. This often leads to brittle implementations that fail under edge cases or require constant maintenance as models evolve.
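For contrast, here is the kind of hand-rolled truncation logic the paragraph describes. The `estimateTokens` heuristic (roughly four characters per token) is an assumption for illustration only. A real implementation would count tokens with the target model's tokenizer, which is exactly the per-model detail that makes this code brittle.

```typescript
interface Message {
  role: "system" | "user" | "assistant";
  content: string;
}

// Rough heuristic (assumption): about four characters per token.
function estimateTokens(text: string): number {
  return Math.ceil(text.length / 4);
}

// Keep system messages, then add the newest other messages that still fit.
// Note: if the system prompt alone exceeds the budget it is returned anyway,
// the kind of edge case this hand-written approach makes easy to miss.
function truncateToBudget(messages: Message[], budget: number): Message[] {
  const system = messages.filter((m) => m.role === "system");
  const rest = messages.filter((m) => m.role !== "system");
  let used = system.reduce((sum, m) => sum + estimateTokens(m.content), 0);
  const kept: Message[] = [];
  for (const message of [...rest].reverse()) {
    const cost = estimateTokens(message.content);
    if (used + cost > budget) break; // this message and all older ones are dropped
    kept.unshift(message);
    used += cost;
  }
  return [...system, ...kept];
}

const conversation: Message[] = [
  { role: "system", content: "Be brief." },       // 9 chars  -> 3 tokens
  { role: "user", content: "a".repeat(40) },      // 40 chars -> 10 tokens
  { role: "assistant", content: "b".repeat(40) }, // 40 chars -> 10 tokens
  { role: "user", content: "c".repeat(20) },      // 20 chars -> 5 tokens
];
const trimmed = truncateToBudget(conversation, 20);
console.log(trimmed.map((m) => m.role).join(", ")); // system, assistant, user
```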

Before MCP:

Prompt consistency poses another significant challenge, particularly in systems supporting multiple LLM backends. Without a standardized protocol, teams often implement custom solutions for prompt template management, leading to divergent implementations and increased maintenance burden. The lack of type safety in prompt construction frequently results in runtime errors that could be caught at compile time.
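Catching prompt errors at compile time is possible in TypeScript with template literal types: the compiler extracts placeholder names from the template and rejects a call that omits one. The `{{name}}` placeholder syntax and the `renderPrompt` helper are assumptions for this sketch, not an MCP feature.

```typescript
// Extract placeholder names such as "topic" from a template like "{{topic}}".
type Placeholders<T extends string> =
  T extends `${string}{{${infer Name}}}${infer Rest}`
    ? Name | Placeholders<Rest>
    : never;

// Hypothetical helper: the compiler requires a value for every placeholder.
function renderPrompt<T extends string>(
  template: T,
  values: Record<Placeholders<T>, string>,
): string {
  const lookup = new Map<string, string>(Object.entries(values));
  // Unknown placeholders are left as-is rather than replaced with "undefined".
  return template.replace(/\{\{(\w+)\}\}/g, (match: string, key: string) => lookup.get(key) ?? match);
}

const rendered = renderPrompt("Summarize {{topic}} for a {{audience}} reader.", {
  topic: "MCP",
  audience: "backend",
});
console.log(rendered); // Summarize MCP for a backend reader.

// renderPrompt("Hello {{name}}", {}); // rejected by the compiler: 'name' is missing
```

The same template string drives both the type check and the runtime substitution, so the two cannot drift apart.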

State persistence across model interactions introduces additional complexity, especially in distributed systems. Traditional implementations often resort to ad-hoc serialization strategies, leading to potential state corruption or inconsistency. MCP addresses these challenges through its protocol primitives, providing guaranteed consistency semantics and type-safe operations.
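One alternative to ad-hoc serialization is a snapshot format with an explicit schema version that is validated on load. The `Snapshot` shape, `SCHEMA_VERSION`, and the hand-written checks in `parseSnapshot` are hypothetical; a production system would more likely use a schema-validation library.

```typescript
const SCHEMA_VERSION = 1;

interface Snapshot {
  version: number;
  conversationId: string;
  messages: { role: string; content: string }[];
}

function serialize(snapshot: Snapshot): string {
  return JSON.stringify(snapshot);
}

// Validate on the way back in instead of trusting whatever was stored.
function parseSnapshot(raw: string): Snapshot {
  const data: unknown = JSON.parse(raw);
  if (typeof data !== "object" || data === null) {
    throw new Error("snapshot is not an object");
  }
  const s = data as Partial<Snapshot>;
  if (s.version !== SCHEMA_VERSION) {
    throw new Error(`unsupported snapshot version: ${String(s.version)}`);
  }
  if (typeof s.conversationId !== "string") {
    throw new Error("missing conversationId");
  }
  const messagesOk =
    Array.isArray(s.messages) &&
    s.messages.every((m) => typeof m?.role === "string" && typeof m?.content === "string");
  if (!messagesOk) throw new Error("malformed messages");
  return s as Snapshot;
}

const stored = serialize({
  version: SCHEMA_VERSION,
  conversationId: "c-42",
  messages: [{ role: "user", content: "hi" }],
});
const restored = parseSnapshot(stored);
console.log(restored.messages.length); // 1

let rejected = false;
try {
  parseSnapshot('{"version":2,"conversationId":"c-42","messages":[]}');
} catch {
  rejected = true; // an unknown schema version is refused, not half-read
}
console.log(rejected); // true
```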

After MCP:

Coding Patterns Look Different Now

Model Context Protocol fundamentally transforms how developers build AI applications by introducing protocol-level guarantees for previously challenging operations. It standardizes context management patterns across different LLM implementations, providing type safety and consistency guarantees that were previously difficult to achieve. By treating context operations with database-like guarantees, MCP eliminates entire categories of common failures while reducing implementation complexity. This protocol-driven approach enables developers to focus on building features rather than managing technical debt from custom implementations.

These three simple code blocks are impactful: they show how a context object is created in MCP and how it supports retry strategies and state actions.

Architectural Evolution: Breaking New Ground

These implementation patterns reveal a deeper architectural transformation at play. While the code demonstrates immediate benefits in terms of type safety and error handling, the true impact of MCP emerges when we examine how these patterns reshape our fundamental approach to LLM system design. The protocol-first nature of MCP introduces architectural patterns that extend far beyond simple API standardization, establishing new paradigms for AI application architecture.

Protocol-First Design

Traditional LLM applications build from the ground up, starting with model interactions and gradually adding layers of abstraction. MCP inverts this approach, introducing protocol semantics as the foundational layer. Consider this architectural shift:

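```typescript
// Illustrative sketch only: these class, interface, and enum names are
// hypothetical and are not part of the official MCP SDKs.

interface ContextManager {
  getContext(): Promise<string>;
}

interface LLMClient {
  generate(prompt: string): Promise<string>;
}

interface StateStore {
  update(response: string): Promise<void>;
}

// Traditional layered approach
class TraditionalLLMSystem {
  constructor(
    private contextManager: ContextManager,
    private modelClient: LLMClient,
    private stateStore: StateStore,
  ) {}

  async process(input: string): Promise<string> {
    const context = await this.contextManager.getContext();
    try {
      const response = await this.modelClient.generate(context + input);
      await this.stateStore.update(response);
      return response;
    } catch (error) {
      // Custom error handling per implementation; rethrow so callers
      // never receive an implicit `undefined`.
      throw error;
    }
  }
}

enum MCPConstraints {
  Standard = "standard",
}

enum MCPRecovery {
  Transactional = "transactional",
}

// Fluent pipeline that the protocol-first version relies on.
interface ProtocolHandler {
  withContext(input: string): ProtocolHandler;
  withValidation(constraints: MCPConstraints): ProtocolHandler;
  withRecovery(strategy: MCPRecovery): ProtocolHandler;
  execute(): Promise<string>;
}

// MCP protocol-first design
class MCPSystem {
  constructor(private protocolHandler: ProtocolHandler) {}

  async process(input: string): Promise<string> {
    return this.protocolHandler
      .withContext(input)
      .withValidation(MCPConstraints.Standard)
      .withRecovery(MCPRecovery.Transactional)
      .execute();
  }
}
```

This architectural inversion produces systems where protocol guarantees exist at every layer rather than being bolted on as an afterthought.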

Boundary Dissolution

MCP challenges traditional system boundaries by treating all components, from prompt management to state persistence, as protocol operations. This dissolves conventional separations between:

- Application logic and model interaction
- State management and error handling
- Context manipulation and validation

The protocol establishes a unified semantic space where these traditionally separate concerns merge into protocol-level operations:

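```typescript
// Illustrative sketch only: these names are hypothetical and are not part of
// the official MCP SDKs.
interface ProtocolOperation<T> {
  // Protocol operations combine traditionally separate concerns
  execute(): Promise<T>;
  validate(): Promise<boolean>;
  recover(): Promise<T>;
  persist(): Promise<void>;
}

enum OperationType {
  Generate = "generate",
}

interface OperationConfig {
  type: OperationType;
  constraints: readonly string[];
  recovery: "retry" | "rollback";
  persistence: "memory" | "durable";
}

// Placeholders for application-specific policies and the factory itself.
declare const SystemConstraints: readonly string[];
declare const RecoveryStrategy: OperationConfig["recovery"];
declare const PersistenceModel: OperationConfig["persistence"];
declare function createOperation(config: OperationConfig): ProtocolOperation<string>;

// Protocol handlers manage cross-cutting concerns
const operation = createOperation({
  type: OperationType.Generate,
  constraints: SystemConstraints,
  recovery: RecoveryStrategy,
  persistence: PersistenceModel,
});
```

Emergent Design Patterns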

The protocol-first approach fundamentally transforms how we design LLM applications, introducing patterns that reshape system architecture. Protocol Composition emerges as a primary pattern: operations combine through well-defined protocol rules, and the guarantees of each operation carry over to the combination. This composition model ensures that system invariants hold through every transformation, providing a robust foundation for complex LLM interactions.
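Composition with preserved invariants can be sketched by checking an invariant after every step, so a violation is attributed to the step that caused it. `composeChecked` and the character-budget invariant are hypothetical choices for illustration.

```typescript
interface Context {
  readonly messages: readonly string[];
  readonly charBudget: number;
}

type Step = (ctx: Context) => Context;
type Invariant = (ctx: Context) => boolean;

// Compose steps left to right and check the invariant after each one, so a
// violation is reported against the step that introduced it.
function composeChecked(invariant: Invariant, ...steps: Step[]): Step {
  return (ctx) => {
    if (!invariant(ctx)) throw new Error("invariant violated by input");
    return steps.reduce((acc, step, i) => {
      const next = step(acc);
      if (!invariant(next)) throw new Error(`invariant violated by step ${i}`);
      return next;
    }, ctx);
  };
}

// Hypothetical invariant: total message length never exceeds the budget.
const withinBudget: Invariant = (ctx) =>
  ctx.messages.reduce((total, m) => total + m.length, 0) <= ctx.charBudget;

const add =
  (text: string): Step =>
  (ctx) => ({ ...ctx, messages: [...ctx.messages, text] });
const dropOldest: Step = (ctx) => ({ ...ctx, messages: ctx.messages.slice(1) });

const start: Context = { messages: ["hello"], charBudget: 12 };
const safe = composeChecked(withinBudget, add("world"), dropOldest, add("again"));
console.log(safe(start).messages.join(" ")); // world again
```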

Semantic Boundaries represent another crucial pattern in MCP's architecture. The protocol defines clear interaction semantics, while the type system enforces semantic constraints at compile time. This creates a system where runtime behavior naturally follows protocol guarantees, eliminating entire categories of runtime errors that plague traditional implementations.
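Compile-time semantic boundaries can be approximated with a branded type: only `validatePrompt` can produce a `ValidatedPrompt`, and `sendToModel` accepts nothing else. All names and the `MAX_CHARS` limit here are hypothetical, and the brand exists only in the type system, so the guarantee holds only for code that does not bypass it with a cast.

```typescript
// The brand makes ValidatedPrompt a distinct type that plain strings cannot satisfy.
type ValidatedPrompt = string & { readonly __brand: "ValidatedPrompt" };

const MAX_CHARS = 8000; // hypothetical limit for illustration

// The only sanctioned way to obtain a ValidatedPrompt.
function validatePrompt(text: string): ValidatedPrompt {
  if (text.trim().length === 0) throw new Error("empty prompt");
  if (text.length > MAX_CHARS) throw new Error("prompt too long");
  return text as ValidatedPrompt;
}

// Only validated prompts may cross this boundary; tsc enforces it.
function sendToModel(prompt: ValidatedPrompt): string {
  return `sent ${prompt.length} chars`;
}

const receipt = sendToModel(validatePrompt("Explain MCP in one sentence."));
console.log(receipt); // sent 28 chars

// sendToModel("raw text"); // rejected by the compiler: string is not ValidatedPrompt
```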

State Propagation completes the pattern trinity, introducing a unified approach to state management. The protocol takes responsibility for state transitions, extending guarantees across system boundaries. This propagation mechanism ensures recovery semantics apply uniformly throughout the system, transforming error handling from a per-component concern to a protocol-level guarantee.
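Uniform recovery can be sketched as a single wrapper that every operation passes through, so the retry policy lives in one place rather than in each component. `withRecovery`, `Operation`, and `RecoveryPolicy` are hypothetical names, and simple bounded retry stands in for whatever recovery strategy a real system would use.

```typescript
type Operation<S> = (state: S) => S;

interface RecoveryPolicy {
  readonly attempts: number;
}

// One wrapper applies the same recovery policy to every operation: retry from
// the same input state, and rethrow the last error once attempts run out.
function withRecovery<S>(op: Operation<S>, policy: RecoveryPolicy): Operation<S> {
  const attempts = Math.max(1, policy.attempts); // always try at least once
  return (state) => {
    let lastError: unknown;
    for (let attempt = 0; attempt < attempts; attempt++) {
      try {
        return op(state);
      } catch (error) {
        lastError = error;
      }
    }
    throw lastError;
  };
}

// A hypothetical operation that fails once with a transient error.
let calls = 0;
const flakyIncrement: Operation<number> = (n) => {
  calls++;
  if (calls < 2) throw new Error("transient failure");
  return n + 1;
};

const safeIncrement = withRecovery(flakyIncrement, { attempts: 3 });
console.log(safeIncrement(41), calls); // 42 2
```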

Architectural Implications

These patterns reshape our fundamental assumptions about LLM system design. The Protocol as Foundation principle establishes that systems build up from protocol semantics rather than bolt them on afterwards. This approach ensures guarantees exist by construction, with behavior defined through protocol rules rather than implementation details.

The concept of Unified Semantic Space demonstrates how operations in MCP share consistent semantics. Traditional boundaries between components blur as protocol operations take center stage. Components no longer interact through custom interfaces but through protocol rules, creating a more cohesive and maintainable system architecture.

Perhaps most importantly, MCP enables what we might call Evolutionary Architecture. Systems can evolve through protocol extension while preserving existing guarantees. New capabilities integrate naturally within the protocol framework, with the protocol itself guiding architectural decisions. This evolutionary approach mirrors the maturation we've seen in database systems and distributed computing, where protocol-level guarantees transformed ad-hoc implementations into reliable, production-grade systems.
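The published MCP specification supports this kind of evolution partly through capability negotiation: during `initialize`, client and server each declare the optional features they support, and neither side should rely on a feature the other did not advertise. The sketch below uses a simplified version of the server's `capabilities` object (only the `tools`, `resources`, and `prompts` entries); `availableMethods` and the example server are hypothetical.

```typescript
// Simplified subset of the `capabilities` object a server returns from
// `initialize`; an absent key means the feature is not supported.
interface ServerCapabilities {
  tools?: { listChanged?: boolean };
  resources?: { subscribe?: boolean; listChanged?: boolean };
  prompts?: { listChanged?: boolean };
}

type Feature = keyof ServerCapabilities;

function supports(caps: ServerCapabilities, feature: Feature): boolean {
  return caps[feature] !== undefined;
}

// Hypothetical helper: list only the methods this server can be asked for.
function availableMethods(caps: ServerCapabilities): string[] {
  const methods: string[] = [];
  if (supports(caps, "tools")) methods.push("tools/list", "tools/call");
  if (supports(caps, "resources")) methods.push("resources/list", "resources/read");
  if (supports(caps, "prompts")) methods.push("prompts/list", "prompts/get");
  return methods;
}

// Hypothetical server that exposes tools and resources but no prompts.
const serverCaps: ServerCapabilities = { tools: {}, resources: { subscribe: false } };
console.log(availableMethods(serverCaps).join(", "));
// tools/list, tools/call, resources/list, resources/read
```

A newer server can add a capability without breaking older clients, because clients simply ignore keys they do not know and only call what was advertised.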

This architectural transformation suggests a future where LLM applications achieve the same level of reliability and maintainability that we expect from mature database systems. The protocol-first approach provides a foundation for building AI systems that can evolve while maintaining robust guarantees, marking a significant step forward in the maturation of AI system architecture.

So when are you getting started with MCP? Go rule the LLMs!